Dreaming and Doing Haptics

April 26, 2020

By examining two examples of haptic technologies – the Taptic Engine and the TESLASUIT – David Parisi asks how we should evaluate their utopian and transformative claims. Parisi also reflects on the potential dangers of these haptic devices: who has access to and control of the tactile data that haptic technologies capture, store, and transmit? What new violence will be inflicted against bodies? Whose touch will be extended into virtual worlds and over physical space, and whose bodies will be excluded from these haptic networks? This essay is part of the publication and research project of Open! about the sense of touch in the digital age.

Dreaming Haptics

For over thirty years, we have been waiting for the dream of haptics to come true. Popular press depictions of touch technologies, such as Howard Rheingold’s 1991 book Virtual Reality: The Revolutionary Technology of Computer-Generated Worlds, have portrayed haptic devices as technologies of an imminent future that promise to liberate our repressed sense of touch from the shackles of audio-visual media. The inevitable arrival of haptics, we have been told, will usher in a new mode of interacting not just with computers, but also with other subjects in our communicative networks. According to haptics marketers and engineers, adding touch feedback to computers would make our interactions with them – and with each other – more natural, more holistic, and more engaging. Layering haptics onto existing audio-visual media systems, in this narrative, will not just be additive, but transformative, giving touch a new centrality in the configuration of the mediated sensorium, and allowing us to extend and amplify our sense of touch, just as these audio-visual media had previously extended our senses of seeing and hearing. Channelling a haptocentric humanist tradition that positions touch as both a vital and neglected experiential modality,1 haptics proponents frame touch technology as a means of restoring contact with touch itself, a way to rediscover touch’s power as an epistemic agent. In the dream of haptics, we can regain our lost humanity by seamlessly fusing with touch’s technological extensions.

As haptics engineers have looked towards some promised future that seems always just on the horizon – a future that has been suspended for over thirty years in a state of perpetual imminence – haptics technologies have gradually worked their way into a range of devices, primarily in the form of vibratory communication. Our smartphones buzz in familiar patterns to indicate incoming messages, fitness trackers explode with vibration on our wrists to let us know we’ve reached a prescribed goal, sensitive screens jolt our fingertips with vibration cues to replace the lost sensations of pressing buttons and keys, video game controllers rumble in our hands to provide a sense of embodied tactile presence in computer-generated environments, cars use vibratory messages to warn us of impending danger, networked sex toys provide remote titillation controllable via smartphone apps, and pressure-sensitive toothbrushes even employ sequenced interruptions in vibration patterns to help us modulate the force we apply when cleaning our teeth. Even by conservative estimates, there are over four billion haptics-enabled devices in use around the world.

Perpetual Infancy

Despite their ubiquity and abundance, these haptics technologies are all, for haptics proponents, inadequate to fulfil the categorical and transformative promises of haptics writ large. In a 2019 interview, Immersion Corporation Chief Technology Officer and twenty-year industry veteran Chris Ullrich echoed the commonplace sentiment that ‘we are in the infancy of this technology, so the potential for haptics is great’.2 Such pronouncements about the early lifecycle of haptics technologies – and their potential for inevitable further growth – have been made frequently throughout the field’s history, with little acknowledgement that the repeated promises of haptics’ impending rapid maturation remain unfulfilled. In psychology, haptics (‘the doctrine of touch’, as Edward Bradford Titchener defined it) has been a dedicated field of study since at least the 1890s; the Cutaneous Communication Lab at Princeton University, dedicated to exploring systems for the electrical transmission of tactile messages, was active from 1962 to 2004; the Institute of Electrical and Electronics Engineers held its first Haptics Symposium in 1992; Computer Haptics announced its formalized status as a ‘new discipline’ in 1997;3 engineers, computer scientists, and other researchers have penned tens of thousands of scholarly articles on virtualizing touch; hundreds of new patents for haptics technologies are granted each year (with a single company holding over 3,500); and the long history of haptics engineering has resulted in scores of devices brought to market (as detailed in Hasti Seifi’s Haptipedia project). Whether the year is 2000 or 2020, current-generation devices always portend the development of something richer and more robust.

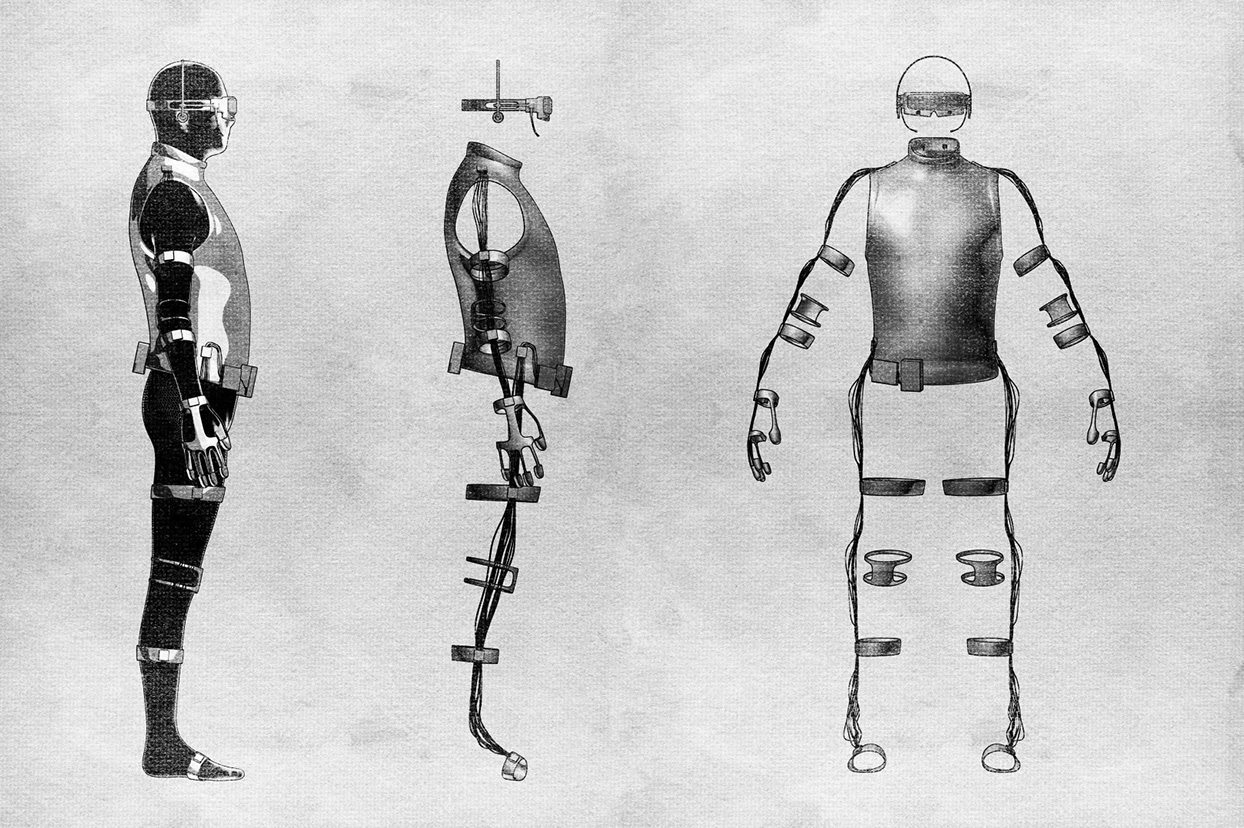

Virtual reality’s reawakening in the 2010s sparked the development of a new wave of haptic feedback devices for VR, bringing renewed cultural attention to these long-promised utopian possibilities for extending touch into virtual and computer-generated worlds. Devices like the HaptX VR glove, TESLASUIT (haptic shirt and pants), Bebop Sensors Forte Data Glove, UltraLeap’s (formerly UltraHaptics) tangible hologram, TEGway’s ThermoReal system for temperature feedback, haptic controllers from Microsoft, and Contact CI’s haptic VR gloves, in conjunction with the cinematic depiction of a high-fidelity haptic bodysuit in Steven Spielberg’s 2018 film Ready Player One, have prompted speculation in the popular press that we are poised to finally enter an era of omnipresent haptic bodysuits and gloves. In an age of seemingly relentless dematerialization – where physical objects and physical spaces are increasingly being replaced by audio-visual signifiers of their presence – it is easy to understand the seductive lure of devices that offer to restore the world’s lost tangible materiality.

However, these more robust instantiations of haptics technology present a two-fold problem. First, from a practical standpoint, they are prohibitively expensive to develop and especially costly to manufacture, meaning that it is difficult for these devices to achieve any sort of widespread adoption. Consequently, there is little incentive to learn to encode haptic data for the devices that do exist: authoring content for these devices is effectively writing for an imaginary haptic audience. Haptics proponents take it as an article of technodeterministic faith that these issues will be overcome, given enough time and continued investment, but the production costs of motors and other actuators for higher-end devices have remained stubbornly high over the past few decades. Second, this situation presents a methodological challenge. How are we to evaluate the utopian and transformative claims mobilized around haptics technologies, if the technologies required to bring about these positive transformations still exist only in research labs and carefully orchestrated tech demonstrations? And conversely: the dangers of these devices are still largely abstract; they won’t be fully manifest until (and unless) they see a widespread uptake. What new vulnerabilities is a body exposed to when it slides into a haptic suit or enters a haptic exoskeleton? Who will have access to and control over the data of touch that haptics technologies capture, store and transmit? What new violence will be inflicted upon bodies, for the sake of forward progress in haptics research? Whose touch will be extended into virtual worlds and across physical space, and whose bodies will be excluded from these haptic networks? Who will govern and regulate the flow of haptic data through information networks? In short: how do we anticipate and grapple with the consequences of technologies that remain largely unrealized?

To address these questions, I examine two examples of haptics technologies, one that is already ubiquitously deployed, and another that is still under development for a planned commercial release. In both cases, I am interested in understanding the material processes necessary for touch to pass through digital networks and devices, on the one hand, and the cultural processes involved in creating a demand for haptics technology, on the other. The successful proliferation of haptics technologies requires not only the invention and production of new and increasingly complex forms of touch technology, but also – and perhaps more crucially – the production of desire for haptics itself, accomplished through a sustained critique of visualist interfaces that situates haptics as an ameliorative corrective made necessary by the inadequacies of optical media. Finally, highlighting the fantasies expressed through both devices allows us to struggle with the ethical challenges haptics technologies present, tacitly pushing back on those curative and ameliorative narratives offered by proponents of the technology.

Doing Haptics I: The Taptic Engine

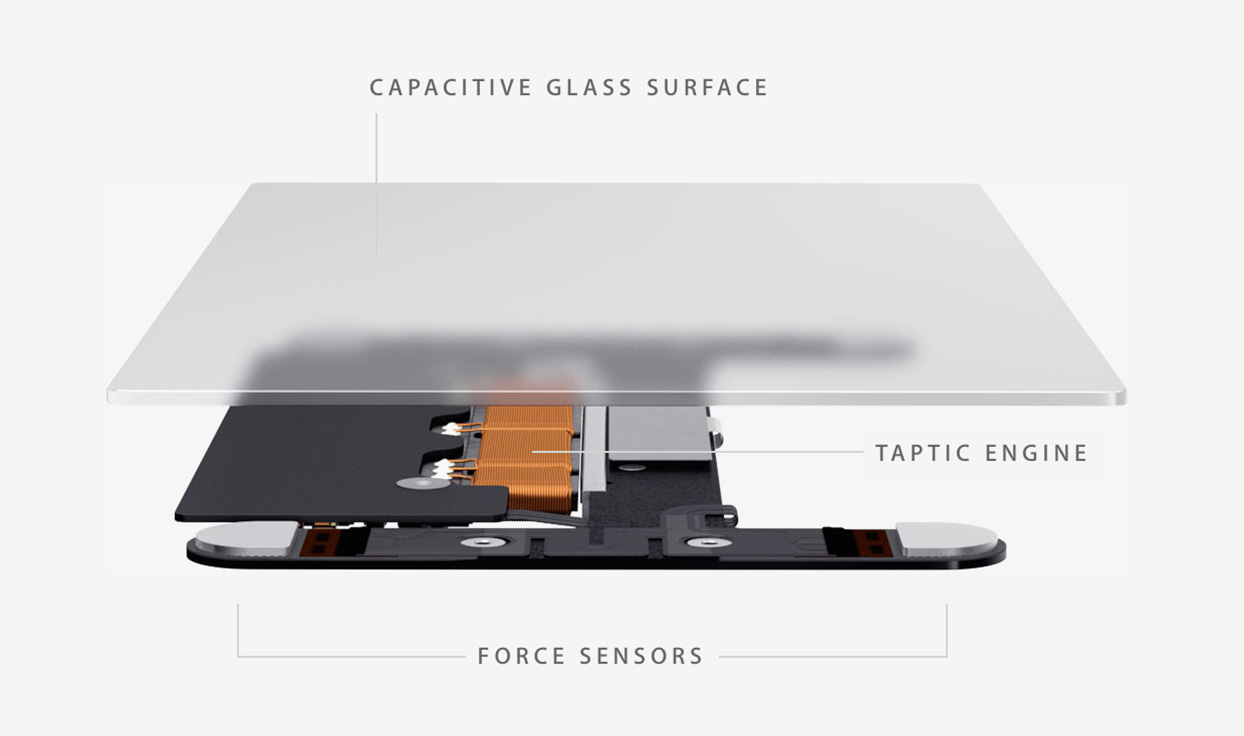

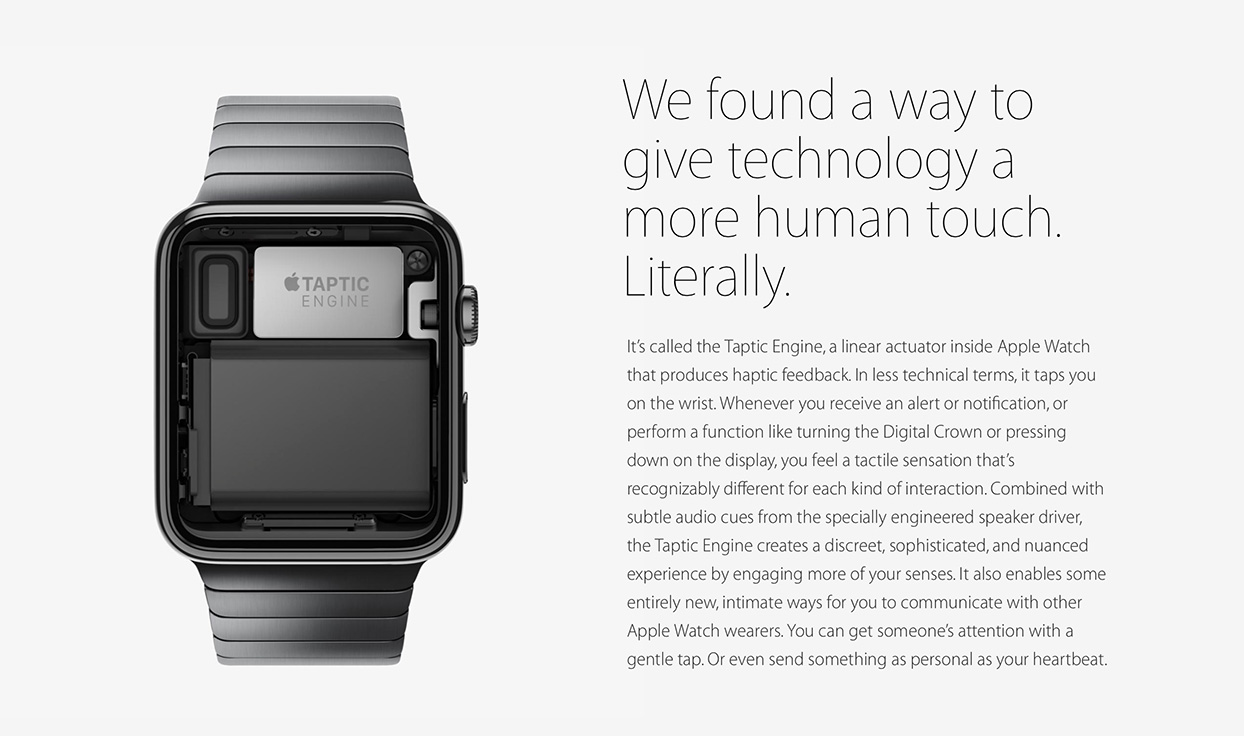

Debuting in the first-generation Apple Watch in 2015, the Taptic Engine represented a substantial departure from previous forms of vibration feedback by using a Linear Resonant Actuator (LRA) instead of the Eccentric Rotating Mass (ERM) motors found in most mobile devices. This allowed the Apple Watch (and subsequent iPhones that incorporated the Taptic Engine) to produce haptic alerts that were qualitatively different from those of other companies’ devices, as LRAs provide more precise and controlled haptic signals. In an ad touting the feature, Apple claimed that this change in its mobile haptic signalling mechanism would usher in an advanced mode of tactile communication through wearables: ‘you feel a tactile sensation that’s recognizably different for each kind of interaction. […] [T]he Taptic Engine creates a discrete, sophisticated, and nuanced experience by engaging more of your senses. It also enables some entirely new, intimate ways for you to communicate with other Apple Watch wearers. You can get someone’s attention with a gentle tap. Or even send something as personal as a heartbeat.’

Despite Apple’s claims of novelty, the Taptic Engine sits comfortably in a much longer tradition of networked tactile alert systems, recalling the US military’s Cold War-era research into tactile languages, which involved training radio operators to receive coded messages – sent either by vibration or electricity – through the skin. To calibrate tactile messages to the psychophysical capacity of their human receivers, these alert systems required detailed scientific knowledge about the skin’s discriminatory capacities at different sites on the body, along with the design and iterative development of a coding system for the various linguistic signs that would be passed through the skin. Although some of the vibrations and shocks these apparatuses produced were materially distinct, such differences in stimuli would have been imperceptible had the signals not been calibrated to their human receivers. Tactile information, like other forms of information, depends on ‘a difference which makes a difference’, as Gregory Bateson put it.4 Receivers of tactile messages had to develop a trained sensitivity to the fine differences between vibrations and electrical shocks, a sensitivity cultivated by rigorous and structured regimens modelled on the teaching of Morse code to radio operators.

Crucially, such systems for passing information through touch were understood as a response to a crisis in mid-twentieth-century information environments, with the eyes and ears ‘assaulted so continuously’ by media that touch emerged as an alternative to the ‘seriously overburdened’ and ‘oversaturated’ visual and auditory channels.5 Touch communication provided a way to offload information from these exhausted channels to a purportedly under-exploited one, which psychologists and engineers had previously thought incapable of receiving coded messages (Braille and other systems of touch communication did not constitute sufficient cause for overturning this assumption). The US military continued to fund this attempted redeployment and transformation of touch via technical apparatus throughout the twentieth century, and while the fantasy of deploying nuanced touch communication networks ubiquitously in the armed services was never realized, such research succeeded in establishing a framework of scientific facts, instruments and investigative techniques that would prove generative to subsequent engineers who took up the problem.

Apple’s efforts are also in continuity with more recent attempts at developing nuanced haptic alerts transmitted through mobile handsets. The haptics firm Immersion Corporation, for instance, developed a system called VibeTonz – customizable haptic ringtones that could be assigned to specific contacts and specific messages – widely deployed in Samsung handsets over a decade ago. Although Immersion’s marketing push was not as savvy or robust as Apple’s was for the Taptic Engine, we can find similar rhetoric in a 2004 press release, where the company claimed that VibeTonz ‘enables the phenomenon of sensory harmony, in which the combined effect of individual senses is greater than the sum of their parts. Adding touch sensations to sight and sound cues allows greater realism and intuition in the way people navigate and use their mobile phone’. The Taptic Engine, then, can be thought of not as a new interaction paradigm, but rather, as part of a gradual refashioning of the skin, where touch emerges as a crucial participant in our attention economy. Though much has been made of the excessive number of times we touch our devices (one recent study estimates 2,617 contacts per day), the manual manipulation of digital media is often cued by the passive reception of signals sent through touch – signals that capitalize on the new forms of intimate contact between digital devices and users’ bodies to open touch up as a communicative channel.

However, much of the transformative promise around the Taptic Engine – and tactile alerts more generally – remains unfulfilled. Unleashing the potential of these systems depends on mobilizing a network of communicative subjects who want to be trained to perceive the differences between tactile stimuli. An improved signalling system on its own is not enough; it needs users willing to create and decode the nuanced haptic messages relayed through the engine, users who will identify its tactile sensations as ‘recognizably different’ from each other, and from other modes of producing vibratory signals. Users need to be convinced that they want and need more complex forms of haptic signals in the first place.

Pushing back on Apple’s claims about the desirability of haptic alerts, we can consider how such alerts have already flooded the tactile channel with data. Smartphones, tablets, and wearables constantly buzz with violent urgency, demanding that we attend to them – part of what Christian Licoppe calls ‘the crisis of the summons’. With the increasing use of haptic alerts by app developers, we may already be facing ‘haptic overload’, as the technology journalist Ben Lovejoy suggested, where the tactile channel is becoming overcrowded with alerts from private companies looking to use the channel as a pathway for value extraction. Increasing the volume of haptic messages the skin is tasked with receiving may work against the desired effect, resulting in a numbness or indifference to tactile alerts, or prompting users to simply disable vibration alerts altogether – a sort of tragedy of the haptic commons.

Doing Haptics II: The TESLASUIT

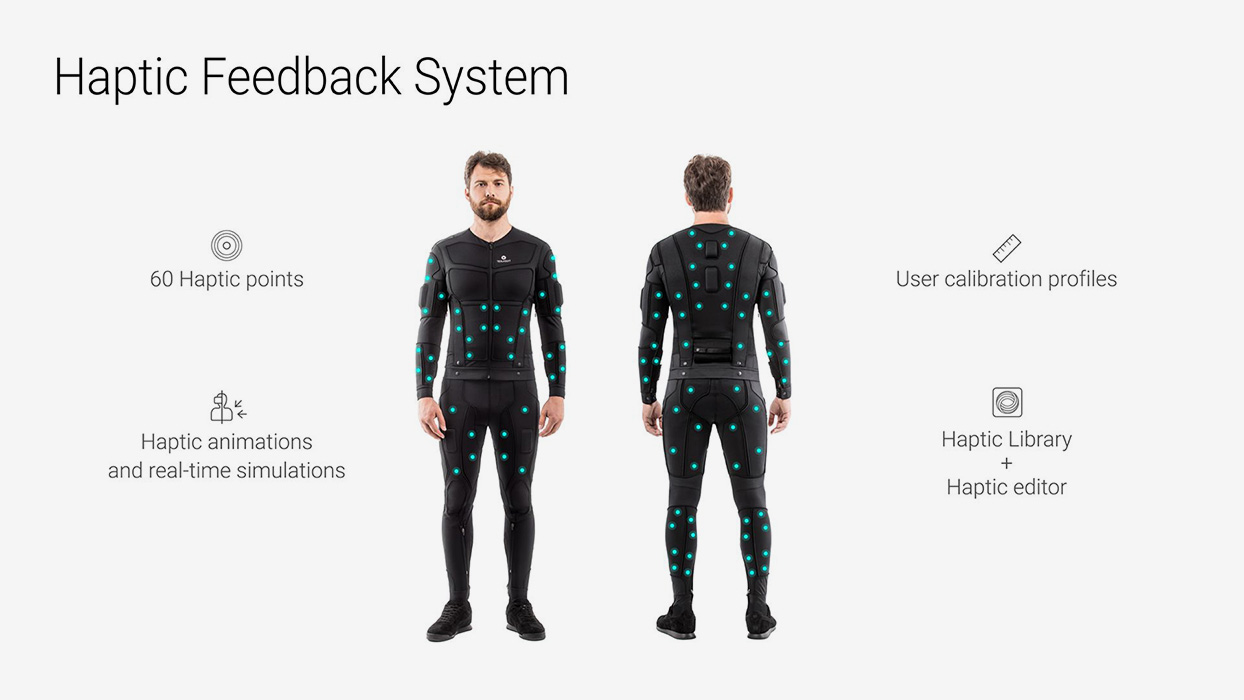

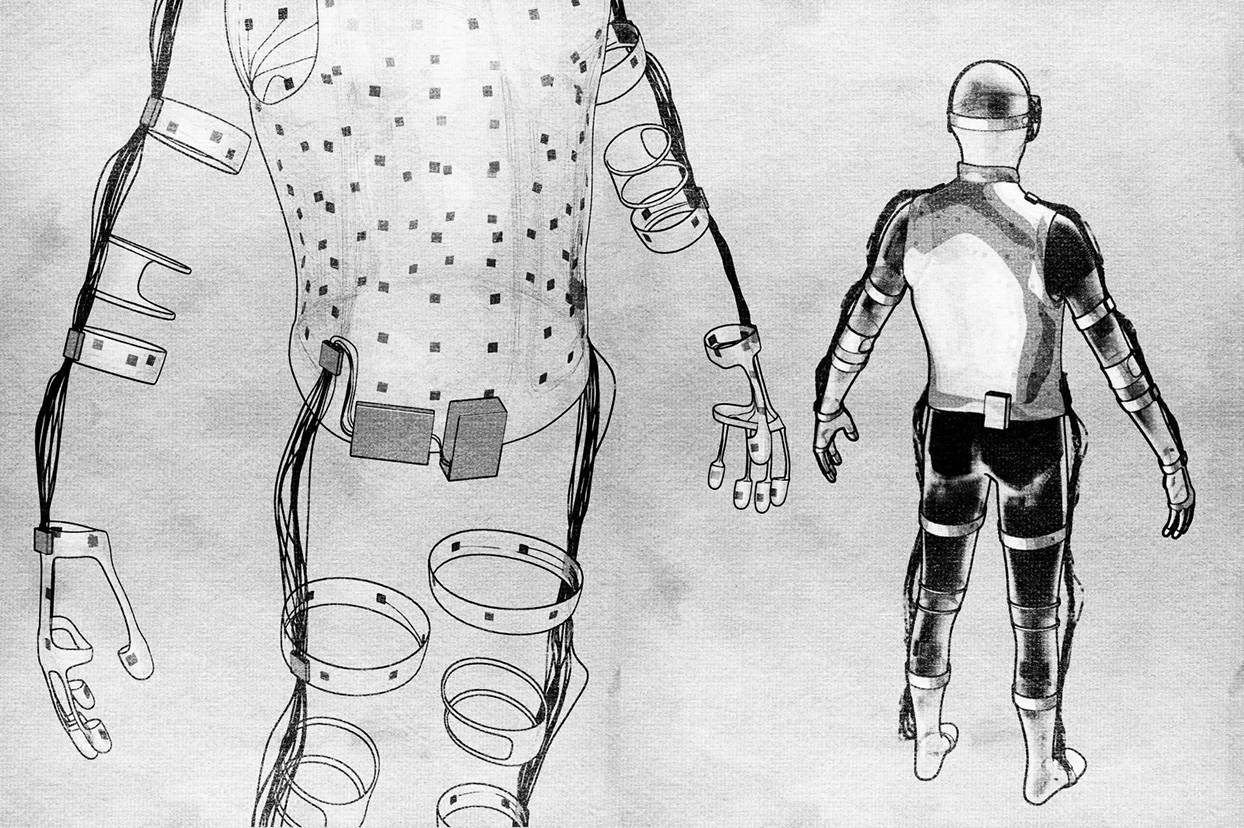

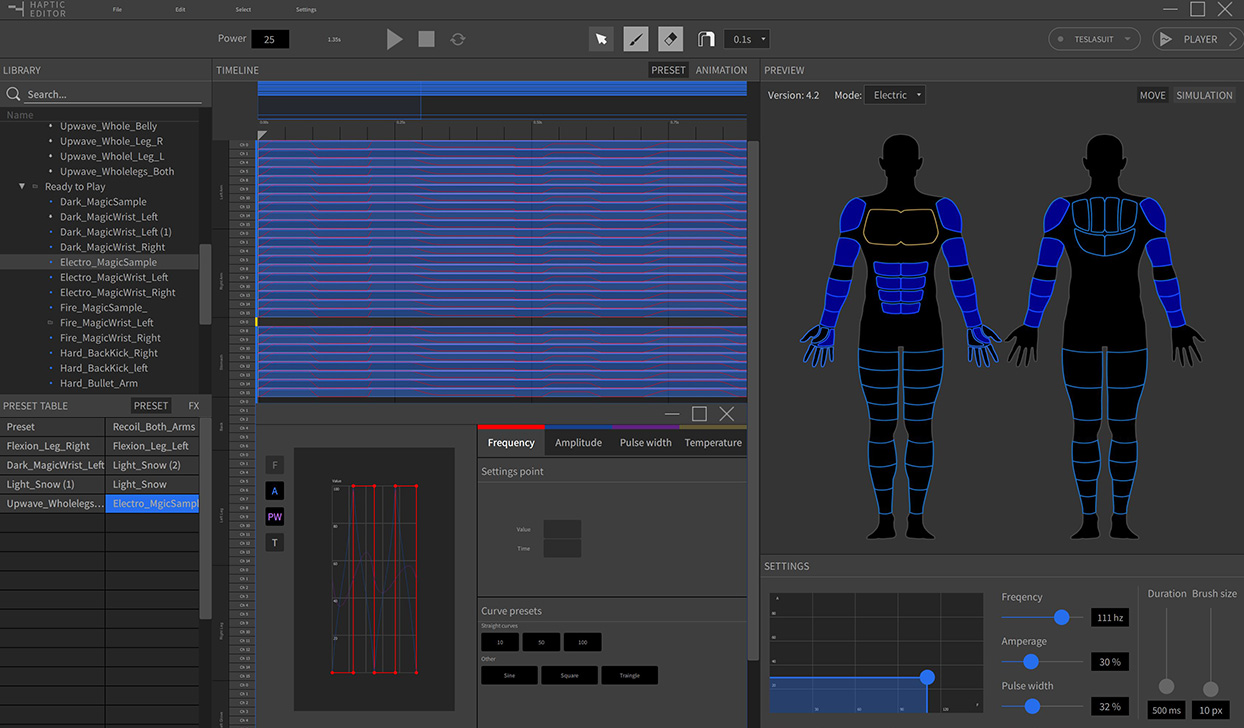

The TESLASUIT, a wearable haptic bodysuit still under development for eventual commercial release and unrelated to Elon Musk's Tesla, utilizes an array of eighty electrodes distributed throughout the suit to both stimulate the tactile nerves and assume control over the wearer’s muscles. The suit features a motion sensing system, for the capture and display of the wearer’s body in virtual space, along with electrocardiogram (EKG) and electrodermal activity (EDA) sensors that feed data about the wearer back to TESLASUIT’s software, allowing for the monitoring of their physiological responses to sensory stimuli. When it was initially announced in 2013, the TESLASUIT was marketed as an add-on for VR video gaming, as indicated by the product’s initial tagline ‘Feel What You Play’. Coordinating its various methods of stimulation, which included temperature display through an array of thermal control units distributed throughout the suit, would allow the wearer to differentiate sensations from a wide range of virtual objects and events: icicles, explosions, strokes, taps, and punches are each produced through a complex process of haptic effects editing, using TESLASUIT’s software.

After a failed Kickstarter campaign in 2016, which raised only £34,835 of its £250,000 target, the company pivoted away from gaming as its primary use case to focus instead on enterprise applications, including industrial training, VR rehabilitation, and sports instruction. In an interview that year, TESLASUIT CEO Dimitri Mikhalchuk optimistically predicted that the suit would see rapid adoption, while also foreshadowing the company’s eventual turn away from gaming applications: ‘we hope that in five years either our suits or the underlying technology will become as widespread as mobile phones, used for gaming, health care, design and remote communication.’ As Marketing Director Denis Dybsky explained to me during a demonstration, the suit’s ability to make the wearer ‘uncomfortable’ is vital to each of these applications: jolting trainees with an unpleasant shock whenever they deviate from a prescribed sequence of actions can function as a crude sort of aversion therapy. Moreover, the muscle control system allows the suit to force the wearer’s body to conform to a designated routine of movements choreographed by the suit’s software, and, according to Dybsky, it works so well that the wearer cannot resist, ‘no matter how strong you are, if you’re a wrestling champion’. The use case Dybsky provided was of a golfer who wanted to learn to emulate Tiger Woods’ swing: by modelling Woods’ swing, either through direct motion capture or through manual programming, the suit could control the golfer’s body so that it follows the exact pathway Woods takes in the course of his swing. The addition of exoskeleton gloves to the suit (announced in December 2019) further enhances TESLASUIT’s range of control over the body.
Dybsky claims that industrial training applications – developed for workers preparing for jobs on oil rigs, where mistakes can be fatal – will eventually save lives, with workers learning to avoid injurious accidents by making painful errors within the shielded space of a virtual simulation (as depicted in the company’s recent promotional video). In this framing, an audio-visual simulation on its own is inadequate: touch, and pain more specifically, is an essential component of effective and efficient pedagogy.6

In this functionality – the intentional ability to make the wearer uncomfortable – the TESLASUIT breaks from prior traditions in haptic interface design, which had mainly aimed at maximizing comfort wherever possible. With rare exceptions such as art projects (////////fur////’s No Pain No Gain (formerly PainStation) and Eddo Stern’s Tekken Torture Tournament, interactive video game artworks that employed electrical stimulation as punishment for failing game actions) and BDSM electrostimulation devices, haptic interface designers have purposefully constrained the fidelity of haptic simulations, not out of any ethical concern, but rather in the interest of successfully commercializing and marketing their devices. Such sacrifices in fidelity work against Ivan Sutherland’s initial blueprint for computer-controlled force feedback interfaces, put forward in his 1965 address ‘The Ultimate Display’, where he suggested that computerized tactile simulation should be able to make virtual handcuffs confining and virtual bullets fatal. Though it stops short of fully manifesting Sutherland’s violent imaginary machine, the TESLASUIT comes closer to it in spirit than any other haptics device ever designed for commercial release. It is an unquestionably impressive and ambitious piece of technology, the outcome of an iterative design process undertaken by an interdisciplinary team of over 100 researchers from fields including electrical engineering, electrophysiology, robotics, haptics, and computer science. But the suit’s specific material configuration – particularly its ability to inflict pain on the wearer – does not express and embody a natural model of touch. Rather, it shapes touch according to a capitalist process of product design and development.
Specific revisions made throughout the design process, as Dybsky explained, were informed by consultations with corporate clients (especially in the oil and natural gas industries), who told TESLASUIT’s business development team that the suit’s ability to make the wearer uncomfortable was a feature they wanted to see retained and improved. Read in the context of workers being required to wear the suit in mandatory training sessions, repeatedly subjected to shocks and violently forced to perform sequences of computer-choreographed movement, the fantasy expressed by the suit acquires a darker character.

Despite its ambitions to accuracy, despite its willingness to cast off the restraints of comfort maximization, and despite the massive financial and intellectual resources poured into its development, the suit’s haptic stimuli still seem to betray their artifice. During my demonstration, the shocks the suit painted onto my skin felt like targeted bursts of electricity, rather than the objects the shocks were intended to represent (icicles blowing across the torso, in one scenario). The muscle stimulation system worked a bit better on this front: when the suit took control over my arms to simulate the recoil from firing guns, the motion produced by the handgun differed appreciably from the simulated kick of the sniper rifle. But the interface never seemed to disappear – the tactile signifiers of haptic events and objects called attention to themselves, rather than collapsing onto what they signified. The project of making the TESLASUIT function effectively as a representational technology, then, depends on training the wearer to triangulate these forced muscle movements and haptic pings of electricity with images (rendered by a VR headset) and sounds (produced by headphones). The TESLASUIT – like the Taptic Engine – requires its wearers to assimilate to a new regime of tactile signification.

The TESLASUIT may never see a commercial release; currently, the company is only taking orders for custom-fitted suits. But it nevertheless represents the sort of giant leap forward that haptics proselytizers like Ullrich have been waiting their entire careers for – potential breakthrough research that could bring about the long-forecasted technological awakening of touch. If the rapid adoption of the TESLASUIT happens – if the designers can scale the suit down enough that it becomes affordable for more users, if the business development team can convince companies to incorporate the suit in their training programmes, and if the uptake of VR headsets more generally continues to rise at a steady though unspectacular pace – then we will have to confront a new set of questions and concerns around haptics. We will face a crucial tension over the ethics of haptic simulation: to what extent is there an obligation to faithfully and accurately reproduce or simulate a given haptic situation, and to what extent should the users of these devices be shielded from the full and perhaps even damaging consequences of simulated events? How does the materiality of specific haptics devices privilege some bodies over others? Whose fantasy of touch is embodied and expressed in the design of haptics technologies? What new use values do their designers hope to give to touch, through its technological replication and extension?

Undoing Haptics

These two case studies situate haptics as a reconstruction of touch that proceeds according to the imperatives of hegemonic capitalist technoscience. Both examples illustrate the way that corporations, by investing in haptics research, attempt a takeover of touch, subjecting it to new regimes of rationalized sense-making and framing tactility within the disciplining apparatus of haptics. These research programmes order touch as an exploitable economic resource: a means of facilitating more effective flows of data to the body, in the case of the Taptic Engine, or a way to more efficiently adapt the body to the demands of industrial resource extraction, in the case of the TESLASUIT. In these corporatized constructions of touch, haptics research is presented as a site for contesting and pushing back on hegemonic models of the technologized sensorium – a way to overcome and ameliorate the purportedly deleterious, distancing, and dematerializing consequences of audio-visual media. Discursive articulations of haptics technology, by engineers and marketers alike, respond to our mediatic situation with the promise of restoring and rehabilitating touch through its technological reconstruction – suturing a new extension onto the mediated sensorium to undo its fragmentation by prior technologies of sensory extension. This promise of liberation and desubjectification – a promise of freedom from control and governance by audio-visual technologies – through the embrace of touch technologies obscures the new modes of control and subjectification haptics requires.

To help evaluate the vast and sweeping predictions frequently advanced around touch technology, we need to confront the specific ways touch is expressed through and encoded by individual instantiations of haptics technologies. Each new touch technology necessarily involves a process of strategically replicating tactility – design decisions about which aspects of touch should be passed through digital networks, and which tactile sensations the interface will screen out. Moreover, haptics technologies, in both their design and marketing, construct a set of ideal users: haptic suits and gloves, in their material configurations, define the normative ergonomic body types that they can interface with, while marketing campaigns present a vision of the imagined user of the technology (the TESLASUIT’s initial promotional videos, for instance, showed only male bodies wearing the suit). Such explorations allow us to productively push back on narratives of technological inevitability that present haptics as lurching constantly towards an ever-greater degree of haptic fidelity, situating the technology’s continued development as contingent upon the ongoing cultural project of producing a demand for the technological replication of touch. Rather than waiting for some long-promised dream of an idealized haptics to come true, we should undo the dream altogether, replacing it with a sustained and attentive account of the ongoing ways touch continues to be operationalized through digital media.

1. This narrative of restoring humanity by embracing mediated tactility echoes claims the Canadian media theorist Marshall McLuhan famously advanced in the 1960s, when he argued that electronic media would restore unity to a sensorium fragmented by the rise of visual communication technologies. Media, for McLuhan, extended the senses, but they did so unevenly, resulting in an unbalanced ratio among the five senses. Television, with its mosaic mesh of dots, worked not through vision, but through a synesthetic tactility, as McLuhan explained: ‘the TV image requires each instant that we “close” the spaces in the mesh by a convulsive sensuous participation that is profoundly kinetic and tactile, because tactility is the interplay of the senses, rather than the isolated contact of skin and object.’ McLuhan, Understanding Media: The Extensions of Man (Cambridge, MA: MIT Press, 1994 [1964]), 314. The rise of electronic media ‘dethrones the visual sense and restores us to the dominion of synesthesia, and the close interinvolvement of the other senses’. Ibid., 111. However, McLuhan’s theory of tactility, in deemphasizing contact between skin and object, leaves one unprepared to grapple with contemporary haptic media, which, as I detail in the essay, operate by capturing, storing and transmitting data specifically for the tactile senses.

2. ‘Immersion Champions The Power Of Touch Technology’, Superb Crew, 13 December 2019.

3. See Mandayam Srinivasan and Cagatay Basdogan, ‘Haptics in virtual environments: Taxonomy, research status, and challenges’, Computers & Graphics 21, no. 4 (1997).

4. Gregory Bateson, Steps to an Ecology of Mind (Chicago: University of Chicago Press, 2000), 462.

5. Frank Geldard, ‘Adventures in Tactile Literacy’, American Psychologist 12, no. 3 (1957): 115.

6. Such a perspective echoes the early twentieth-century educator Maria Montessori’s focus on tactile values in her pedagogical programme, which entailed the development of tactile materials aimed at the cultivation of a practised sense of touch.

David Parisi is an Associate Professor of emerging media at the College of Charleston whose research explores the technological mediation and extension of tactility. His book Archaeologies of Touch: Interfacing with Haptics from Electricity to Computing (2018) provides a media archaeology of haptic human-computer interfaces, linking their development to the histories of electricity, psychophysics, cybernetics, robotics, and sensory substitution. He is co-editor of the Haptic Media Studies themed issue of New Media & Society (2017). Parisi's current research project examines the material and semiotic processes involved in adding touch feedback to video game interfaces.